CaaS Services Through AWS, Azure, and Google Cloud

On the 1st of December, 2020, as the world was preparing for the end of a turbulent year dominated by the COVID-19 pandemic, AWS presented the cloud community with an early present: container image support for AWS Lambda. Lambda functions can now be packaged and deployed as container images, so you can run them with the benefits that containers provide.

Serverless functions allowed the industry to get up and running with business code in an instant. This novel compute service made it possible to break away from setting up complex infrastructure and configurations, and from the scaling and related operational work of running in production.

However, serverless offerings are, in their current state, greatly limiting, for example when you attempt to use a programming language that your serverless offering does not support, or when you try to import libraries. AWS Lambda layers target this problem by allowing you to add required libraries and external code bases as ‘layers’ on top of your AWS Lambda function. Similarly, Azure provides Binding Extensions, an open-source model that lets the community build new types of bindings that can then be brought into your Azure Functions.

Unfortunately, these methods are limited in how they can be leveraged. The new container image support for AWS Lambda functions therefore follows AWS’ goal of providing workarounds and solutions that lower the barriers the cloud community is facing.
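To make this concrete, a function destined for a container image is still an ordinary handler. The sketch below assumes a Python runtime; the `name` field and the response shape are illustrative assumptions and do not come from AWS’ announcement. When the image is built on one of the AWS-provided Lambda base images, the handler is typically named in the image’s command (for example `app.handler` for a file called `app.py`, a hypothetical name here), and any extra libraries are installed into the image itself instead of being attached as layers.

```python
import json


def handler(event, context):
    """Entry point that the Lambda runtime invokes for each event."""
    # `event` is the decoded JSON payload; `context` carries runtime metadata.
    name = event.get("name", "world")  # "name" is a made-up field for illustration
    return {
        "statusCode": 200,
        "body": json.dumps({"message": f"Hello, {name}!"}),
    }
```

Because the handler is plain Python, it can be exercised locally with a dictionary as the event before any image is built.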

Such an effort to combine and balance the capabilities of serverless and container offerings is not new. The issue is that the benefits derived from serverless make the compute paradigm extremely attractive, but they also come with limits on flexibility and use. It is the age-old proverb of not being able to have your cake and eat it too.

However, cloud vendors are now striving to defy this as they race to provide various cloud compute services and to enhance them with features that add flexibility. Each of these services comes with its own set of trade-offs. The motive is to provide a diverse enough list of services, and of capabilities within those services, to meet the requirements of a cloud market with a diverse set of customers.

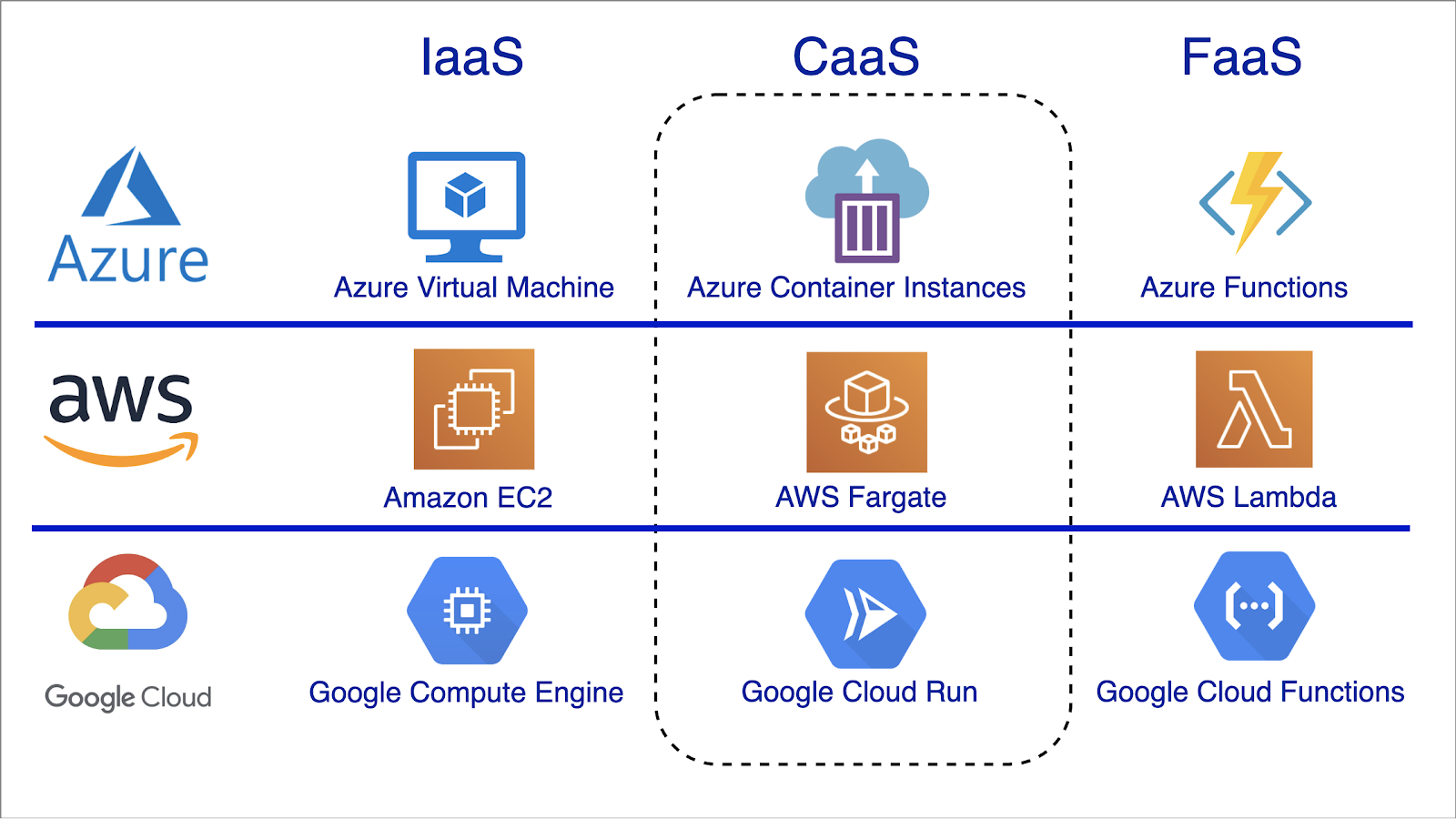

For example, AWS provides EC2 containers, AWS Lambda functions, and AWS Fargate. Azure and GCP offer similar services such as Azure Container Registry, Azure Container Instances, Google Cloud Run, and more. Additionally, we can note functionality such as AWS Lambda layers, Azure Binding Extensions, and various other incremental improvements.

As can be seen, cloud vendors have advanced both the services within each compute paradigm and the paradigms themselves. Apart from the improvements noted in these vendors’ serverless offerings, we have also seen the rise of serverless containers as a paradigm: Containers as a Service (CaaS). The goal of this article is therefore to explore the concept of Containers as a Service and to survey the services currently offered by the prominent cloud vendors: AWS, Azure, and Google Cloud.

Peering Into the CaaS Paradigm

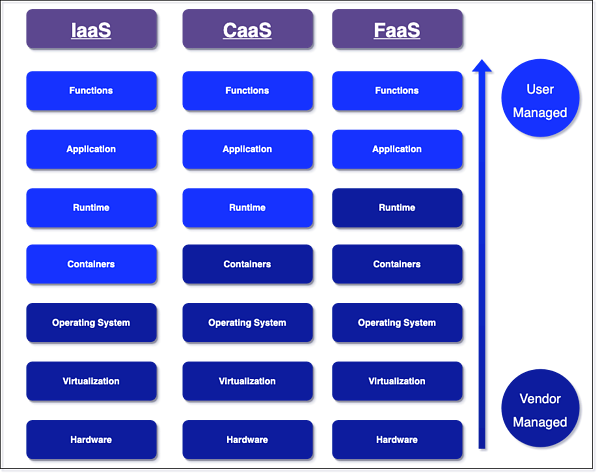

One of the attractive promises of cloud computing is the major reduction in the complexity of managing server hardware. With the rise of cloud offerings, we could simply delegate hardware management responsibilities to the cloud vendors. However, we now had to learn how to provision virtual servers on cloud vendor platforms, which introduced a new type of operational burden. Over the years, cloud compute has evolved from Infrastructure-as-a-Service (IaaS) to Containers-as-a-Service (CaaS) and finally to Functions-as-a-Service (FaaS). These paradigms offer different levels of abstraction, with FaaS being the easiest to use because it abstracts the most away.

Naturally, one would expect cloud developers to rush towards FaaS services, which have materialized as serverless offerings. However, there is a catch. More abstraction means a lighter operational burden, but in exchange one has to sacrifice flexibility and accept operational limitations.

For example, with Amazon EC2 instances, which fall under the IaaS paradigm, you have to specify rules, networking, security, monitoring, and much more. Apart from container orchestration, the other major issue is auto-scaling, since defining scaling rules at a container level is extremely arduous. All this extra operational burden does mean, however, that you can configure your environment in almost any way you like. You could choose any runtime, for example, not have to worry about timeout limitations, and define granular auto-scaling rules that best fit your business needs.

However, with this added flexibility, you lose out on the three major characteristics of serverless, which are also the compute model’s prime benefits. These are as follows:

- Server management is abstracted to the vendor.

- Pay-as-you-go model where you only pay for what you use.

- Automatically scalable and highly available.

This is where the CaaS paradigm aims to hit the sweet spot by sitting conveniently between containers and serverless services. To understand how, let us consider the CaaS offerings from the three major cloud vendors operating in the field.

Floating Containers in the Cloud

AWS Fargate

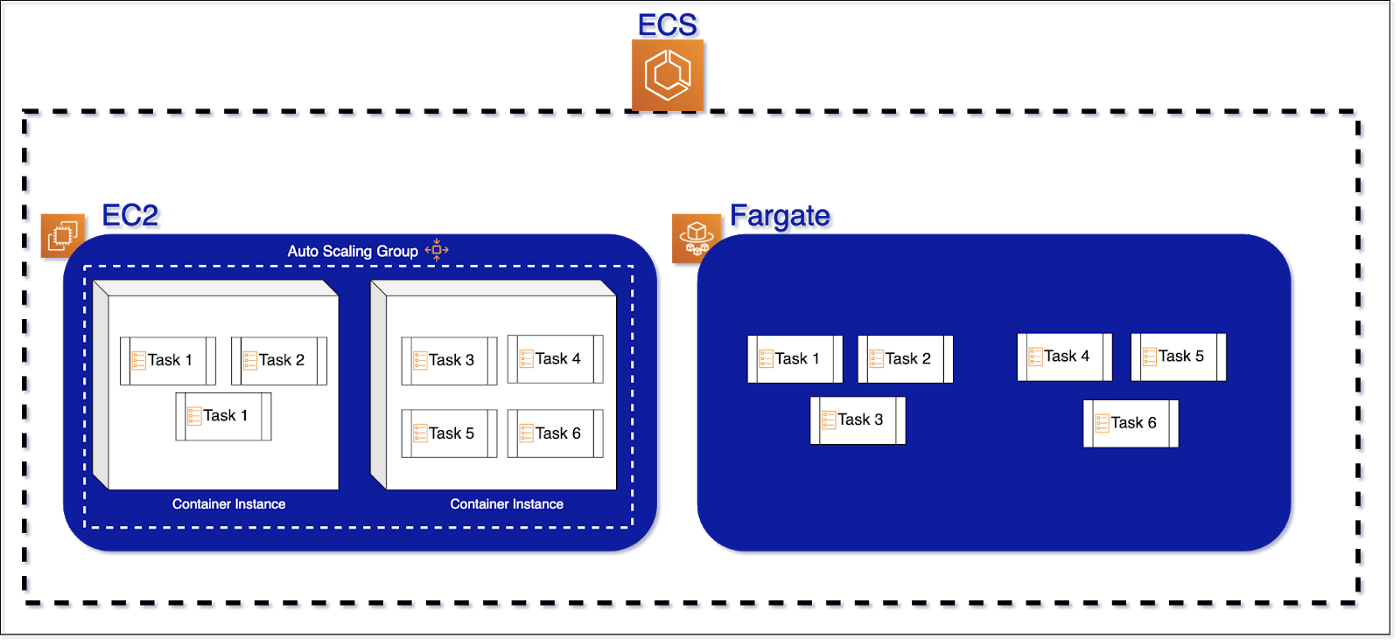

AWS Fargate is AWS’ CaaS solution, whereas Amazon EC2 and AWS Lambda functions are the more traditional IaaS and FaaS services respectively. Unlike with Amazon EC2, the container infrastructure has already been set up, including the networking, security, and most importantly the scaling. With the service, you simply have to specify the resources for each container instance and let Fargate work its magic under the hood.
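As a rough illustration of what "specifying the resources" amounts to, the sketch below builds a dictionary shaped like the request body of the ECS `RegisterTaskDefinition` API. The helper name `fargate_task_definition`, the family name, and the image are hypothetical, and the size table is a partial list of CPU and memory pairings that should be checked against the current Fargate documentation before use.

```python
# CPU units -> allowed memory values in MiB.
# Partial table, included as an assumption; verify against current Fargate docs.
FARGATE_SIZES = {
    256: (512, 1024, 2048),
    512: tuple(range(1024, 4096 + 1, 1024)),
    1024: tuple(range(2048, 8192 + 1, 1024)),
}


def fargate_task_definition(family, image, cpu=256, memory=512, port=80):
    """Build a request body shaped like an ECS RegisterTaskDefinition call."""
    if memory not in FARGATE_SIZES.get(cpu, ()):
        raise ValueError(f"unsupported Fargate size: cpu={cpu}, memory={memory}")
    return {
        "family": family,
        "requiresCompatibilities": ["FARGATE"],
        "networkMode": "awsvpc",  # the network mode Fargate tasks use
        "cpu": str(cpu),          # task-level sizes are passed as strings
        "memory": str(memory),
        "containerDefinitions": [
            {
                "name": family,
                "image": image,
                "essential": True,
                "portMappings": [{"containerPort": port, "protocol": "tcp"}],
            }
        ],
    }


definition = fargate_task_definition("web", "nginx:latest")
```

With boto3 installed and credentials configured, a dictionary like this could be passed to an ECS client as `register_task_definition(**definition)`. Nothing in it describes servers, clusters of machines, or scaling hardware, which is the point of the service.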

Each Fargate task comes with its own dedicated elastic network interface (ENI), which allows tasks to communicate with one another, whereas containers within the same task communicate via localhost. The management of these tasks is done by Amazon ECS. Fargate is defined as a compute engine for ECS, providing a different way of running tasks, and this is the defining characteristic that links Fargate to container services.

However, this is only one side of Fargate. There is an entire serverless side too, which comes from the fact that it is built on top of Firecracker, AWS’ open-source microVM technology. This is how it achieves the required auto-scalability and facilitates the pay-as-you-go model.

Azure Container Instances

Azure became the first cloud vendor to offer a CaaS service when it announced Azure Container Instances (ACI) in mid-2017. The intention, which then resonated with all other vendors in their CaaS offerings, was to simplify developers’ experience of setting up container instances.

ACI allows the setup of isolated containers with pre-configured properties covering security, networking, and storage. For example, ACI strengthens security because its individual container model offers isolation at the hypervisor level. This makes ACI a great solution for multi-tenant use cases. Additionally, the billing model follows that of a serverless compute service: adopters of ACI pay only for the resources they use, measured in memory and vCPUs per second, similar to AWS Fargate.

It must be noted that pricing may vary across the different resource types that Azure provides. This is partly because ACI supports special resources such as GPUs, which are priced differently for obvious reasons, whereas AWS Fargate restricts the container types that can be provisioned and offers no GPU support.
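The per-second billing on memory and vCPUs can be sketched as a small calculation. The rates below are hypothetical, chosen only to make the arithmetic easy to follow, and are not Azure’s or AWS’ actual prices; the function name `container_cost` is likewise an assumption for illustration.

```python
def container_cost(vcpus, memory_gb, seconds, vcpu_rate, gb_rate):
    """Cost of one container billed per vCPU-second and per GB-second."""
    return seconds * (vcpus * vcpu_rate + memory_gb * gb_rate)


# Hypothetical rates (assumed, not real prices).
VCPU_RATE = 0.000012   # currency units per vCPU-second
GB_RATE = 0.0000015    # currency units per GB-second

# A 1 vCPU / 2 GB container that runs for 10 minutes a day, for 30 days.
seconds_per_month = 10 * 60 * 30
monthly = container_cost(1, 2, seconds_per_month, VCPU_RATE, GB_RATE)
print(f"{monthly:.4f}")
```

The key property is that the cost scales with the seconds actually used: a container that never runs contributes nothing, which is what distinguishes this model from paying for an always-on virtual machine.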

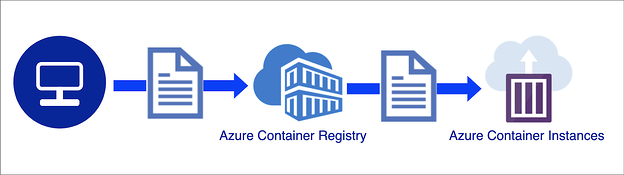

Azure provides a well-integrated ecosystem that can enhance the developer’s experience when adopting ACI. For example, we can leverage Azure Container Registry (ACR) when deploying our container images, similar to the Docker registry. Additionally, there are tools and services such as the Azure Portal, Azure CLI, and Azure Resource Manager templates at our disposal.

Google Cloud Run

The third CaaS service that we should explore is Google Cloud’s Cloud Run. This is the most recent release in this group of CaaS offerings, having become generally available in November 2019. With Google Cloud Run, developers can provision stateless containers, similar to the CaaS services provided by the other two vendors. With the service, Google managed to retain the core benefits of serverless while providing additional flexibility in the form of support for additional programming languages, system binaries, or any set of required libraries.
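A minimal sketch of what such a stateless container might run is shown below, using only the Python standard library. It relies on the Cloud Run convention that the container listens on the port given in the `PORT` environment variable; the class name `Hello`, the helper `make_server`, and the response text are illustrative assumptions.

```python
import os
from http.server import BaseHTTPRequestHandler, HTTPServer


class Hello(BaseHTTPRequestHandler):
    def do_GET(self):
        # Stateless: the response depends only on the incoming request.
        body = b"Hello from a container\n"
        self.send_response(200)
        self.send_header("Content-Type", "text/plain; charset=utf-8")
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)


def make_server(port=None):
    """Bind to the given port, or to the PORT environment variable (default 8080)."""
    if port is None:
        port = int(os.environ.get("PORT", "8080"))
    return HTTPServer(("0.0.0.0", port), Hello)
```

Calling `make_server().serve_forever()` as the container’s entry point would start the service. Because the handler keeps no state between requests, the platform is free to start, stop, and replicate instances as traffic changes.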

Even though Google Cloud Run was the last to arrive in the market, Google was already offering something of a CaaS service in the form of Google Kubernetes Engine (GKE). Since Google created Kubernetes, it was only natural for it to provide a fully managed Kubernetes service. Mete Atamel spoke about GKE and how it brings Kubernetes to a serverless platform in his talk at ServerlessDays Istanbul.

The reason I describe GKE as only somewhat CaaS is that, in my view, it is more of a Kubernetes as a Service (KaaS) offering than a CaaS one. This is because it does not fully adhere to the pay-as-you-go model. When you manually create a GKE cluster, the nodes and environment are perpetually available and hence incur costs irrespective of usage. Considering this, Google Cloud Run is more serverless and hence better defined as a CaaS service. Overall, when comparing GKE and Google Cloud Run, we see that the latter requires less orchestration and fits the pay-as-you-go model better.

Conclusion

As the movement to the cloud continues, cloud vendors are continually innovating to meet the needs of customers. As the industry began to adopt the cloud, container orchestration became a major pain point of development. To mitigate these issues, we saw the advent of serverless. Even though the novel compute model managed to abstract all infrastructure setup, it came at the cost of flexibility. Hence, we now see the rise of serverless containers, the best of both worlds.

AWS, Azure, and most recently Google Cloud have all entered the field with their respective services. Regardless of the differences in their offerings, we see a common goal shared by all three: to simplify container orchestration while retaining the flexibility needed to serve the multitude of use cases developers have in their journey to the cloud, thereby advancing cloud adoption in general. After all, the idea of the cloud has been the main focus of software for the past decade, reshaping the way software is built and operated. CaaS simply improves the experience in its own innovative ways, echoing the great inventor Thomas Edison’s line on innovation: ‘The value of an idea lies in the using of it.’